A caching solution is always necessary if you want to build scalable applications which will handle lots of users and heavy data access without requiring more hardware resources. I’ve already talked about caching when using Entity Framework, but is there a way to use caching in Linq to SQL and what are the problems one might face.

To explore caching in Linq to SQL I’ve built a small web site that had a single page. This page used Linq To SQL to access the AdventureWorks sample Database which you can download from Codeplex.

To explore caching in Linq to SQL I’ve built a small web site that had a single page. This page used Linq To SQL to access the AdventureWorks sample Database which you can download from Codeplex.

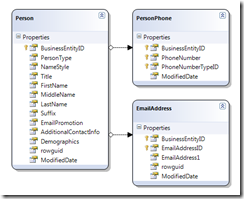

The very simple Linq To SQL model that I’ve created had three entities, a Peson and its Phones and Email addresses.

The site default page contained a repeater that was databound to the first Person that his last name begun with the letter M, fetched by a call to the GetPerson method.

public partial class _Default : System.Web.UI.Page

{

protected void Page_Load(object sender, EventArgs e)

{

if (!IsPostBack)

{

DataBind();

}

}

public override void DataBind()

{

base.DataBind();

employeesRepeater.DataSource = new List<Person>() { GetPerson("M") };

employeesRepeater.DataBind();

}

protected Person GetPerson(string lastNameStartsWith)

{

using (AdventureworksDataContext context = new AdventureworksDataContext())

{

return context.Persons.Where(p => p.LastName.StartsWith(lastNameStartsWith)).FirstOrDefault();

}

}

}

This works, but imagine tens of thousand of users accessing this page at the same time asking for the same thing. This would result in thousand of SQL commands reaching SQL server. And in this case the SQL command is trivial but what would happen if the query was more complex? The answer is that this page would become significantly slower as more users would try to access it.

To overcome this, I’ve introduced a simple Caching mechanism to the page utilizing the System.Web.Caching.Cache Object of the Page and changed the GetPerson method to use this caching mechanism.

protected Person GetPerson(string lastNameStartsWith)

{

using (AdventureworksDataContext context = new AdventureworksDataContext())

{

return CacheGet<Person>(

() => context.Persons.Where(p => p.LastName.StartsWith(lastNameStartsWith)).FirstOrDefault(),

string.Format("Person_LastName_StartsWith:{0}", lastNameStartsWith));

}

}

protected T CacheGet<T>(Func<T> loader, string cacheKey) where T : class

{

var cachedObject = this.Cache[cacheKey] as T;

if (cachedObject == null)

{

cachedObject = loader.Invoke();

}

return cachedObject;

}

Now only the first call to the GetPerson method results into a query being made to the SQL Server, all resulting calls will be served by the Cache (that is of course if the cache key aka last name character matches) and its going to be equally fast for either one user or million of users. So what’s the problem? Anyone care to guess?

To be continued…

Nice post 🙂

If the Persons table is changed in the meantime, it will still send the cached data..?

Of course, you”re absolutelly right.

But that”s not really the problem in this solution as you can easily implement a Cache eviction mechanism to remove from cache, entities, when changed.

You might like this:

damieng.com/…/dictionaryt-look-up-or-create-m…

Beats me… The only thing i can think of is that if a new person that matches the criteria is added, it won”t be returned if the cached object isn”t null. And, by the way, shouldn”t a more sophisticated way be implemented regarding as to what stays in and what gets dropped from the cache? I have the impression that if a new and entirely different request is made, all cached objects will be discarded even if they shouldn”t. Am i correct assuming that?

I”m sorry but I am not a web developer, so I need to ask a few questions. In your closing paragraph you mention that the cache key is the last name (”cache key aka last name”). Wouldn”t this be a poor choice? The Cache.Add() method states that:

”Calls to this method will fail if an item with the same key parameter is already stored in the Cache. To overwrite an existing Cache item using the same key parameter, use the Insert method.”

You present no code that explains how you implement your caching policy, but if you”re adding one person at a time, you obviously cannot have two individuals with the same LastName because in a very short time you will run out of keys. Why don”t you cache an entire List collection object instead? You can use a single key for the entire collection of ”Persons” and you can work with the list. I”ve seen a few examples of this technique on the Internet.

It would be extremely useful to reveal more code about how you implemented caching to your solution and please let me know if I failed to understand things correctly…

You are absolutely right, a new Person whose last name begins with an "M" won”t appear until the cached item is cleared. And surely there are much more sophisticated solutions to cache, ones that include sliding expirations, eviction policies and so forth, that was not the intention of this post though. I”m planning on showing the problems that a developer faces when caching (using any solution) Linq To SQL entities, and I didn”t need a complex caching mechanism to show just that.

You”re also right in your second comment, the key is wrong because I”m only caching the First Person that his last name begins with an "M" so the rest of the "M" persons will never appear, but in a real word senario I would cache a list of Persons starting with the letter "M" using that cache key so there wouldn”t be a shortage of cache keys. The code hypothetically would look something like that

protectedPerson GetPerson(stringlastNameStartsWith){using(AdventureworksDataContext context =newAdventureworksDataContext()){returnCacheGet<List<Person>>(() => context.Persons.Where(p => p.LastName.StartsWith(lastNameStartsWith)).ToList(),string.Format("Person_LastName_StartsWith:{0}", lastNameStartsWith)).FirstOrDefault();}}Thanks for your comments and check out the next post in the series to see what is the actual point I actually want to make.